Digital Futures Lab/Issue #28: 'Courts of Tomorrow: Justice in the Age of AI', Urvashi in MIT Sloan Management Review India's Policy 50, Human in the Loop at FOSS & Spotlight on Capacity-Building

This month at Digital Futures Lab, we continued to bridge research, policy, and practice, shaping global discourse on AI governance while strengthening local capacity for responsible innovation. We spoke at convenings, facilitated workshops with partners designing social-impact AI solutions, and took Human in the Loop to IndiaFOSS United!

Our engagements across Goa, Bangalore, and global platforms reflect a shared commitment: ensuring that innovation in AI is matched by equal investment in trust, inclusion, and accountability.

Here’s what we’ve been building, learning, and reading this month. ⬇️

events 🎤

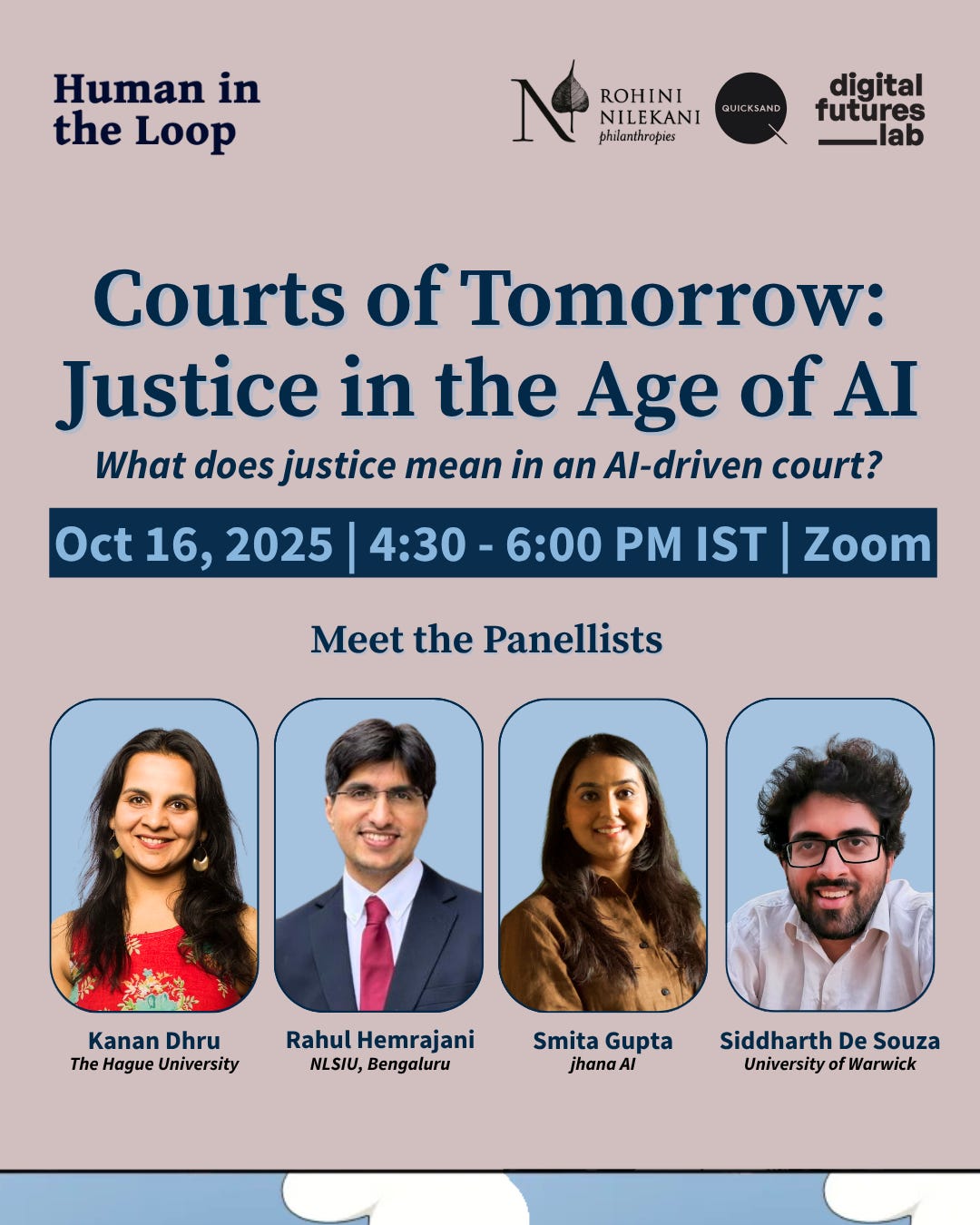

On October 16, we’re hosting the second virtual panel in our series, Human in the Loop (supported by Rohini Nilekani Philanthropies): ‘Courts of Tomorrow: Justice in the Age of AI’.

This panel will build on the comic ‘Premium Justice’, a speculative story set in a near-future Tamil Nadu where the judicial system turns to AI to reduce staggering backlogs and speed up decisions. Its techno-optimist protagonist, Judge Nathan, embraces the promise of efficiency until he realises how profoundly the system has shifted. We will be joined by:

Kanan Dhru, The Hague University of Applied Sciences and Ritam Ventures

Rahul Hemrajani, National Law School of India University, Bengaluru

Smita Gupta, jhana

Siddharth Peter de Souza, University of Warwick and Justice Adda

Register now!

Research Associate Anushka Jain attended the ‘Network of Women Politicians Meeting on Regulation of AI in India: Applying a Gender Lens’. Organised in Goa by Friedrich-Ebert-Stiftung India, this one-day convening brought together women professionals and international experts to reflect on how India can pursue its vision of becoming a global AI powerhouse while safeguarding against the risks of AI and GenAI. Anushka spoke on ‘Gendered AI Harms and Specific Areas of Regulation’, prompting a discussion that underscored the need to balance innovation with responsibility to ensure inclusive and accountable AI futures.

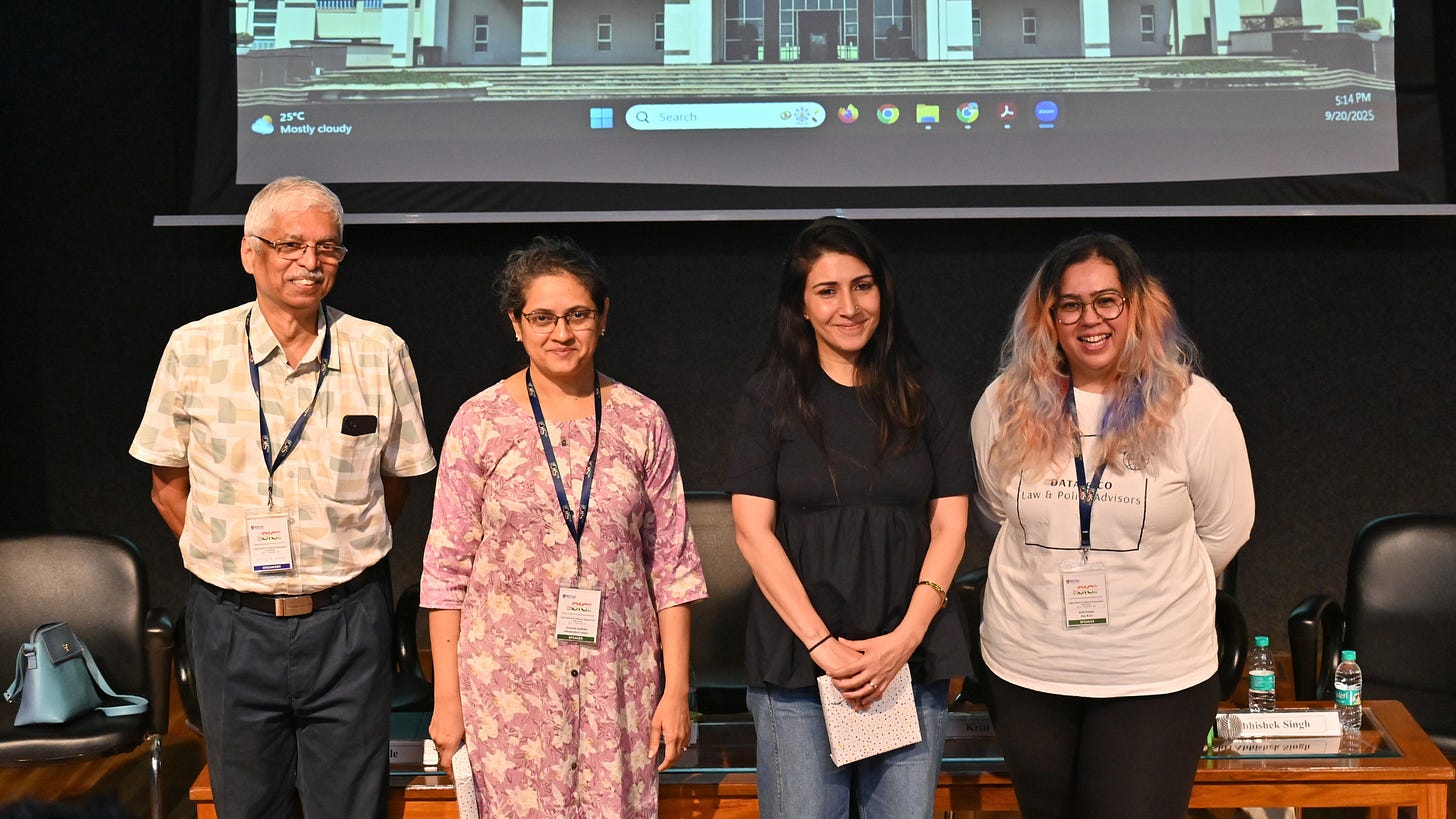

Founder and Executive Director Urvashi Aneja was invited to speak at inSIG 2025 on the panel ‘Governance of AI and Emerging Tech’. The session opened with a keynote by Mr Abhishek Singh, Additional Secretary, MeitY, and was moderated by Kriti Trehan (Data & Co.). Ms Gowree Gokhale joined Urvashi as a co-panellist. The discussion centred on how policy frameworks, standards, legislation, and regulations can be designed to govern AI and emerging technologies so that critical issues such as human rights, data privacy, copyright, intellectual property, algorithmic transparency, and security are effectively addressed.

As the Knowledge Partner on Responsible AI for Project Tech4Dev’s AI Cohort Program, we recently facilitated a workshop in Bangalore on a critical question: What happens when trust in AI tips into over-reliance?

The Program is currently supporting seven NGOs in India as they design and scale responsible AI solutions, with our team facilitating thematic workshops and offering tailored advisory support drawn from our Responsible AI Fellowship. In Bangalore, Research Associates Sasha John and Shivangi Sharan led participants through discussions and activities on automation bias, confirmation bias, and overestimating AI explanations, highlighting why AI literacy and friction-in-design are key to building effective and responsible systems.

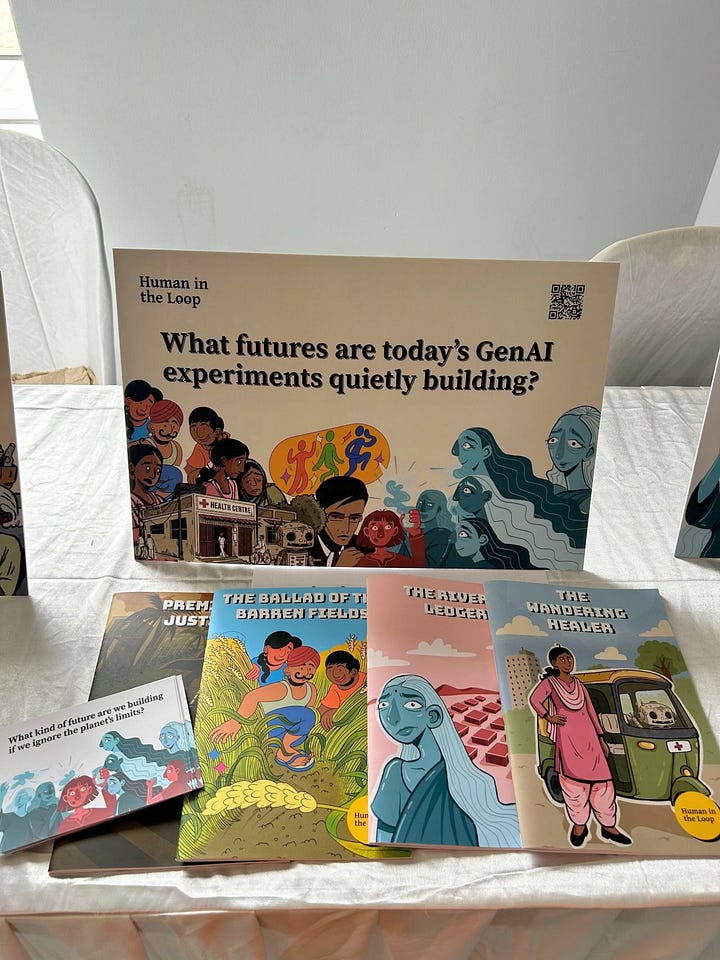

Human in the Loop travelled to Bangalore for IndiaFOSS United 2025! At our booth, Sasha introduced the series to the community and shared how we’re using participatory foresight and storytelling to surface the near-future risks and unintended consequences of Generative AI in India. Over two days, we engaged with more than 200 technologists, developers, students, and researchers, sparking conversations about the comics, their provocations on AI futures, and the power of storytelling.

media ✍🏽

Urvashi was featured in the MIT Sloan Management Review India Policy 50, a list of leaders shaping India’s digital economy. The Policy 50 recognises those whose work anchors this growth in innovation, governance, and public trust. Urvashi’s inclusion reflects her contributions to ensuring India’s digital rise is equitable, future-facing, and globally engaged. Read more here.

partnerships 🤝🏽

Urvashi joined over 300 global leaders, Nobel laureates, and pioneers as a signatory of the Global Call for AI Red Lines. Backed by 90+ leading organisations, the initiative urges governments to agree on red lines for AI by the end of 2026 to prevent the most severe risks to humanity and global stability. Learn more here.

what we’re reading 📖

Harleen

📕 OpenAI is huge in India. Its models are steeped in caste bias by Nilesh Christopher

Shivranjana

📕 ‘Existential crisis’: how Google’s shift to AI has upended the online news model by The Guardian

Aarushi

📕 The Hidden Cost of Quick Wins: How Artificial Intelligence in Administrative Appeals Risks Trust in Justice by Joe Tomlinson, Jed Meers, Izzie Salter, and Phillip Garnett

Spotlight: Building Capacity for the Age of AI 🔦

The narrative of AI in India often centres on infrastructure and innovation: compute power, datasets, and models. But another kind of infrastructure matters just as much: the human and institutional capacity to build, question, and sustain this technological evolution responsibly.

As AI tools become embedded in governance, climate response, and development programmes, capacity building has emerged as one of the most critical and least visible challenges. Who gets to build these systems, who knows how to use them, and who can evaluate them critically enough to decide whether a problem should be solved with them at all? These aren’t merely technical questions but social ones that shape whether AI deepens existing inequities or helps close them.

At Digital Futures Lab, our capacity-building efforts reflect this evolving landscape. From the Responsible AI Fellowship, which served as a programmatic hub for peer learning, to our current role as Knowledge Partner on Responsible AI for Project Tech4Dev’s AI Cohort Program, we have been working with organisations as they design, test, and evaluate their interventions in real time.

1. From frameworks to practice

Capacity building goes beyond training programmes or toolkits. It is about creating enabling environments, from regulatory understanding to technical mentorship, that allow stakeholders and decision makers across the ecosystem to move from awareness to implementation. This means recognising multiple layers of capacity: individual capacity to understand and interrogate AI systems; organisational capacity to design, deploy, and sustain ethical interventions; and ecosystem capacity, involving the funders, governments, and research networks that shape how responsible innovation takes root. Crucially, capacity building is also more than learning to use tools: it is about knowing their limits and building the discernment to decide when not to use them.

2. The missing middle: Sustained and interdisciplinary support

Short-term accelerator programmes or fellowship cycles can ignite awareness but often fall short of supporting deep structural change. Many social-impact organisations are pressed for time, balancing resource constraints with urgent programmatic goals. What’s needed instead is long-term, cross-sector collaboration, where research organisations, technical partners, and funders work together from the design phase onwards. Technical partners help translate responsible AI frameworks into buildable realities, while funders enable continuity beyond pilot phases. Sustained investment in responsible innovation is crucial to ensure pilots evolve into scalable, safe, and effective interventions.

3. Building capacity across the AI supply chain

Capacity building also means building discernment across the AI supply chain, from data policy to user literacy. Many organisations working with sensitive community data may not yet have internal policies on consent, data deletion, or model transparency. Addressing these gaps requires more than technical training; it demands rethinking institutional norms and structures.

At the same time, end-user capacity, or AI literacy, remains underexplored. India has made strides in digital literacy, but AI literacy demands a different kind of engagement: one that examines how users understand, trust, and interact with AI systems. Without this, even well-designed interventions risk disempowering users rather than enabling them.

4. Towards a culture of responsibility

Capacity building, at its core, is about cultivating a culture of responsibility, not compliance. It asks institutions to build the capacity to pause, question, and adapt. If foresight helps us imagine the futures we want, capacity building ensures we have the skills, ethics, and collaborations to get there. In an age where AI is both infrastructure and ideology, building capacity may be our most important act of innovation.

For deeper insights, take a look at our flagship projects on capacity building:

Responsible AI Fellowship: India’s first capacity-strengthening programme for social impact organisations, helping them grow knowledge frameworks, tools, and capacities for Responsible AI through 1:1 mentoring, peer exchange, and expert lectures. Access the entire library of video lectures, resources, and more.

The Practice Playbook on Responsible AI: A guide to designing AI for social impact in India, co-created with 14 social impact organisations and expert mentors from the inaugural cohort of our Responsible AI Fellowship. The Playbook blends real-world insights with proven frameworks.

AI and Healthcare in India: We’re developing a risk assessment and mitigation framework to ensure the safe, ethical, and responsible adoption of AI in Indian healthcare, as the country turns to AI-enabled tools to transform its overburdened healthcare systems.

AI Ethics in the Global South: Capacity-strengthening and Learning: Through this project, we supported grantees of the Gates Foundation’s Global Grand Challenges to advance responsible GenAI in low- and middle-income countries (LMICs). We helped shape ethical, gender-equitable AI solutions for social impact through expert guidance, peer learning, and research.

Have an idea for a creative collaboration or a research partnership? Reach out to Shivranjana at shivranjana@digitalfutureslab.in or write to hello@digitalfutureslab.in.