Digital Futures Lab/Issue #25: Human in the Loop, New Publications & On Responsive Research & Policymaking through Foresight

This past month, Digital Futures Lab has been exploring what it means to do responsive (instead of reactive) research and policymaking in the age of rapid technological change.

From the launch of Human in the Loop—our latest participatory foresight and speculative storytelling-driven project on the near-future risks of Generative AI in India—to new publications and partnerships, we continue to ask: how can research not just describe the world, but shape it for the better?

Here’s what we’ve been up to. ⬇️

research 📑

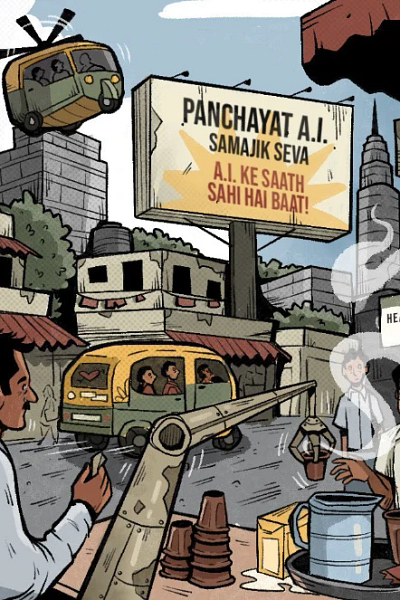

DFL is excited to share our latest research endeavour, one in a long line of work that marries foresight methods, creative storytelling and public engagement. Human in the Loop is a participatory foresight and storytelling project examining the near-future risks and unintended consequences of Generative AI in India.

The stories and accompanying essays explore the potential impacts of Generative AI across diverse domains—from efficiency-driven applications in healthcare and agriculture to the environmental costs of unchecked AI development. They reveal both immediate effects and slower, often overlooked shifts that shape human agency, relationships, and social norms. Check out the first two stories in the series!

We’re also excited to announce DFL’s latest initiative in collaboration with the Centre for Responsible AI (CeRAI) at IIT Madras: a context-aware, actionable framework to identify and mitigate the risks of AI across India’s healthcare ecosystem. Learn more about ‘From Principles to Practice: Responsible AI in Indian Healthcare’ from this blog by Research Manager, Harleen Kaur, and Research Associate and IIC Fellow, Aayushi Vishnoi.

An online collection of writings on Generative AI in the Global South, edited by Sr. Research Manager Aarushi Gupta in collaboration with the Global Centre on AI Governance in South Africa, is out now! The collection brings together insights from pilots that deployed LLMs in development settings across Asia and Africa. It was part of our recently concluded project, ‘AI Ethics in the Global South: Capacity-strengthening and Learning’, under which DFL was part of a network of experts supporting the grantees of the Gates Foundation’s Global Grand Challenges 2023 program on “Catalysing Equitable Artificial Intelligence (AI) Use”.

Sr. Research Associate, Dona Mathew, and Founder and Executive Director, Urvashi Aneja, contributed to stakeholder engagement for Arup’s report, AI for Sustainable Development in ASEAN: Advancing Safe, Trustworthy and Ethical AI for Climate Action, commissioned by the UK Foreign, Commonwealth and Development Office (FCDO). They shared insights on the intersection of AI and sustainability in South and Southeast Asia, highlighting key challenges related to climate data, infrastructure gaps, and the environmental impacts of AI.

If you haven’t already, check out our Practice Playbook on Responsible AI: a guide for social impact organisations on designing, developing, and deploying Responsible AI (RAI) interventions. Building on insights and best practices from our Responsible AI Fellowship, the Playbook is a practical, field-informed guide grounded in real-world challenges and established scholarship on RAI. It also includes concrete implementation examples and a curated set of tools and resources recommended for AI developers.

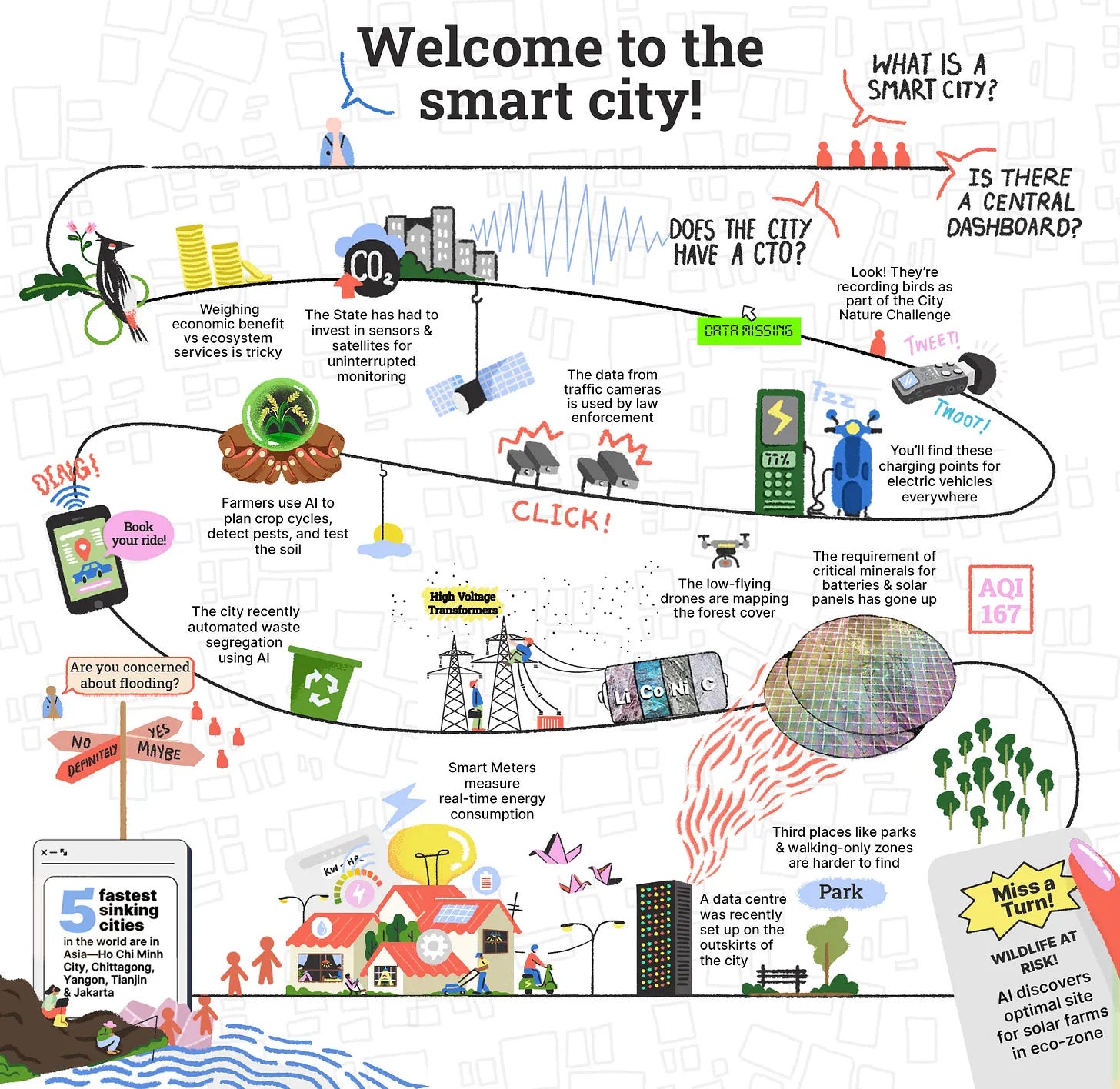

The latest issue of the Code Green newsletter asks a big question about the future of AI in urban governance: ‘Given the dual challenges of climate change and rapid urban expansion, how sustainable will the cities of the future truly be?’

You can supplement the newsletter by listening to the latest Code Green podcast episode on “locating sust(AI)nability in the smart city”. Guests, ChengHe Guan and Raj Cherubal, discuss the future of digital urban transformation, with a focus on smart and sustainable city initiatives in China and India.

events 🎤

Dona delivered a keynote titled ‘The Human and Environmental Costs of the AI Race’ as part of SFLC.in’s ‘AI in Conversation’ series. She emphasised the resource-intensive nature of AI development, particularly in the context of generative AI, and underscored the need for comprehensive impact assessments across the AI supply chain, while also raising awareness of AI's long-term impacts on individuals, communities, and the environment.

Dona also joined a panel discussion on the challenges and opportunities of ML for Climate Action in Asia at Climate Change AI’s ICLR 2025 Workshop: Tackling Climate Change with Machine Learning.

Urvashi joined global leaders, including HE Bart De Wever, Prime Minister of Belgium, at the global launch of the 2025 Human Development Report. She spoke on the panel, ‘A matter of choice: People and possibilities in the age of AI’, where she and the other panellists explored the choices shaping AI-augmented human development — and what’s at stake. Watch here:

DFL co-hosted the Asia regional consultation on data governance for EdTech with UNICEF and Tech Legality, as part of our role in the expert advisory group for UNICEF’s project on data governance for children. Drawing on insights from the earlier global consultation held in March in Florence, Aarushi also wrote a piece on data governance and edtech in India for UNICEF’s website.

media ✍🏽

Aarushi recently joined Shashank Mohan and Tejaswita Kharel, hosts of The CCG Tech Podcast, to discuss the potential gendered consequences of relying on AI systems that may replicate and reinforce existing inequalities as these tools become the default sources of knowledge and assistance across various domains.

what we’re reading 📖

Dona

📕 We did the math on AI’s energy footprint. Here’s the story you haven’t heard. by MIT Technology Review

Sasha

📕 Supportive? Addictive? Abusive? How AI companions affect our mental health by Nature

Aarushi

📕 Consulting the Record: AI Consistently Fails the Public by AI Now Institute

Spotlight: Foresight and the Futures of India’s Tech (and AI) Policy 🔦

Policymaking is, at its core, an act of anticipation. It looks to the future, maps trends, and involves making decisions in the present to shape outcomes for tomorrow.

But what happens when the pace of change outstrips our ability to anticipate? Technologies like Generative AI outpace conventional policymaking timeframes, reconfiguring entire systems before we’ve even finished diagnosing their first impact. This leaves countries and systems in technological and policy lock-ins that are hard, or impossible, to turn back from. For example, what would have happened if we had thought through the impacts of social media back when Facebook first came out? Instead, we are only now getting around to questioning Meta about its anti-competitive practices or the harm caused by the kind of misinformation/disinformation it enables.

In such contexts, linear planning tools focused on immediate impact often fall short, prompting a new question: can we reimagine policymaking itself to be agile and proactive rather than reactive? Such policymaking would systematically account for layered, complex, and multi-pronged futures. It would be less about predicting what will happen and more about preparing for what could happen tomorrow, and choosing today which future pathways to work towards.

At Digital Futures Lab, we’ve been exploring these questions through our use of foresight methodologies across our flagship research projects, looking at the intersection of technology and society. Foresight helps us think beyond the immediate and into possibilities, not just to inform policy but to strengthen research itself. As a methodology, it equips researchers with tools to explore multiple futures, surface emerging patterns, and uncover the layered, often unexpected ways technological developments impact society.

From scenario-building and speculative design to horizon scanning and backcasting, foresight methods help expand the questions we ask, challenge dominant narratives, and anchor our work in long-term, systemic thinking. They allow us not only to imagine different futures, but to rigorously examine how we might shape them.

We believe that in India and the Global South, policymaking, especially tech policy, needs to be more agile, more proactive, and closer to the futures we want to work towards: truly equitable and just digital futures. Here are some core insights from our use of foresight to study and anticipate the impacts of GenAI across labour, climate, gender, misinformation, and justice systems:

1. Foresight is structured speculation, not ungrounded guesswork

Foresight flips the script on how we engage with emerging technologies. It uses grounded expertise, not fantasy, to map plausible futures. Especially in domains such as climate or contexts such as the Global South, where empirical evidence might be lacking, it allows researchers to anticipate harms, navigate ambiguity, and surface structural inequities before they are locked into policy or product design.

2. Foresight can help Indian policy move from reactive to resilient

India’s policy systems are often reactive, marked by fragmented processes, short timelines, and overwhelmed institutions. Foresight can provide structure amid this complexity, enabling longer-range thinking, surfacing first-, second- and third-order effects, and creating space for diverse voices. But institutionalising foresight in India means bridging the language of speculation with grounded, policy-ready outputs.

3. Foresight helps structure imagination in high-velocity tech spaces

In fields like AI and climate, where change is rapid and unpredictable, foresight offers a way to stay ahead of the curve. It doesn't simplify complexity but organises it, making ambiguity manageable and futures imaginable. It helps policy and research stay relevant even when systems are still in flux.

4. Foresight turns research into strategy

Where traditional research ends with diagnosis, foresight pushes toward direction. It doesn’t just ask “what’s happening?” but prompts people and institutions to anticipate “what could happen next?” and, therefore, “what should we do today?” Through tools like backcasting and consequence mapping, foresight makes the future accessible, helping decision-makers act on uncertainty rather than wait for evidence to catch up. When we conducted foresight exercises with our expert network of researchers across Asia as part of the ‘AI+Climate Futures in Asia’ project, it was initially difficult to imagine anything beyond dystopian futures. Present-day data, coupled with the rapid, unpredictable pace of climate and technological change, made it hard to envision hopeful outcomes. In this context, backcasting proved especially useful: it helped experts work backwards from a desired future scenario to identify the actions that can be taken now to make that future possible.

5. Foresight approaches can help make policy more easily accessible

Foresight approaches can also serve as powerful communication tools, making the unknown more graspable. In the face of uncertainty, visual and narrative formats like scenario maps, speculative comics, and storytelling surface complex possibilities in ways that resonate. These outputs translate layered insights into actionable, memorable prompts for public dialogue and policy thinking.

6. Foresight is a way to ask: Who are we innovating for? Representation is a matter of intention.

Foresight methodologies create space for policymakers and researchers to collaboratively return to this foundational question. They offer a means to engage with the wider public and bridge the gaps between policy, academia, and the communities they aim to serve. By surfacing what people are thinking, feeling, and imagining, foresight invites more grounded and inclusive visions of the future. At its core, “fully-fledged foresight is prospective (decidedly future-oriented), policy-related and participative.” But participation is not a given. It requires intentional design and skilled facilitation to ensure diverse voices are not only included but meaningfully engaged. Without that, foresight risks reinforcing dominant narratives or flattening community expertise.

For more such insights, take a look at some of our flagship projects using these methodologies:

Human in the Loop: Our latest, ongoing project, a participatory foresight and storytelling project examining the near-future risks and unintended consequences of Generative AI in India.

AI + Climate Futures in Asia: A network of experts from 9 Asian countries, anchored by DFL, came together to map the current landscape of AI and Climate Action in Asia, identify emerging trends and signals of change, build speculative future scenarios, speak with leading policy experts, and develop a set of recommendations for development organisations.

Future of Work: Unpacking the impact of rapid GenAI adoption on India’s future of work to identify key levers of change and policy pathways towards inclusive labour futures.

Provocations on AI Sovereignty: Drawing on reflections from 18 of India’s leading AI thinkers, this report explores what digital sovereignty means, assesses its feasibility, examines who benefits from such an agenda, and unpacks the new policy conundrums it raises.

Can Digital Public Goods Deliver More Equitable Futures?: Urvashi’s brief in this UNDP RBAP collection of foresight briefs explores how digital public goods can advance sustainable development without deepening inequities. It questions whether current interpretations of openness and community orientation truly serve vulnerable populations.

Work 2030: Scenarios for India: Four future scenarios pertaining to the likely impact of emerging Fourth Industrial Revolution (4IR) technologies on the future of work in India.

Have an idea for creative collaboration or a research partnership? Reach out to Shivranjana at shivranjana@digitalfutureslab.in or write to hello@digitalfutureslab.in