Digital Futures Lab/Issue #26: New Human in the Loop Comics, DFL at ACM FAccT Conference in Greece, Rounding up Code Green, Open Source AI and More!

We’re a little late with this newsletter (sorry!), but we have good reason – we’ve been scheming for the future! More on that in the next newsletter! 👀

But we always have our finger on the pulse of the present: we produced new work on the impact of AI on the climate and climate action; spoke at panels, research conferences, and workshops on topics as varied as open-source AI, mitigating gender bias in LLMs deployed in India, and AI-mediated intimacy; and were quoted in major publications.

Here’s what we’ve been up to this past month. ⬇️

research 📑

We are excited to share two new stories, The River’s Ledger and Premium Justice, under Human in the Loop, a participatory foresight and storytelling project examining the near-future risks and unintended consequences of GenAI in India. Download them below!

DFL’s Founder and Executive Director, Urvashi Aneja, and Aarushi co-authored a report titled ‘Mapping the Potentials and Limitations of Using Generative AI Technologies to Address Socio-Economic Challenges in LMICs’. Drawing from the experiences of LMIC-based innovators and 36 real-world use cases across sectors like health, education, agriculture, financial inclusion, and women’s empowerment, the report argues that while GenAI holds significant potential to accelerate progress on developmental goals, serious risks and systemic barriers must be addressed.

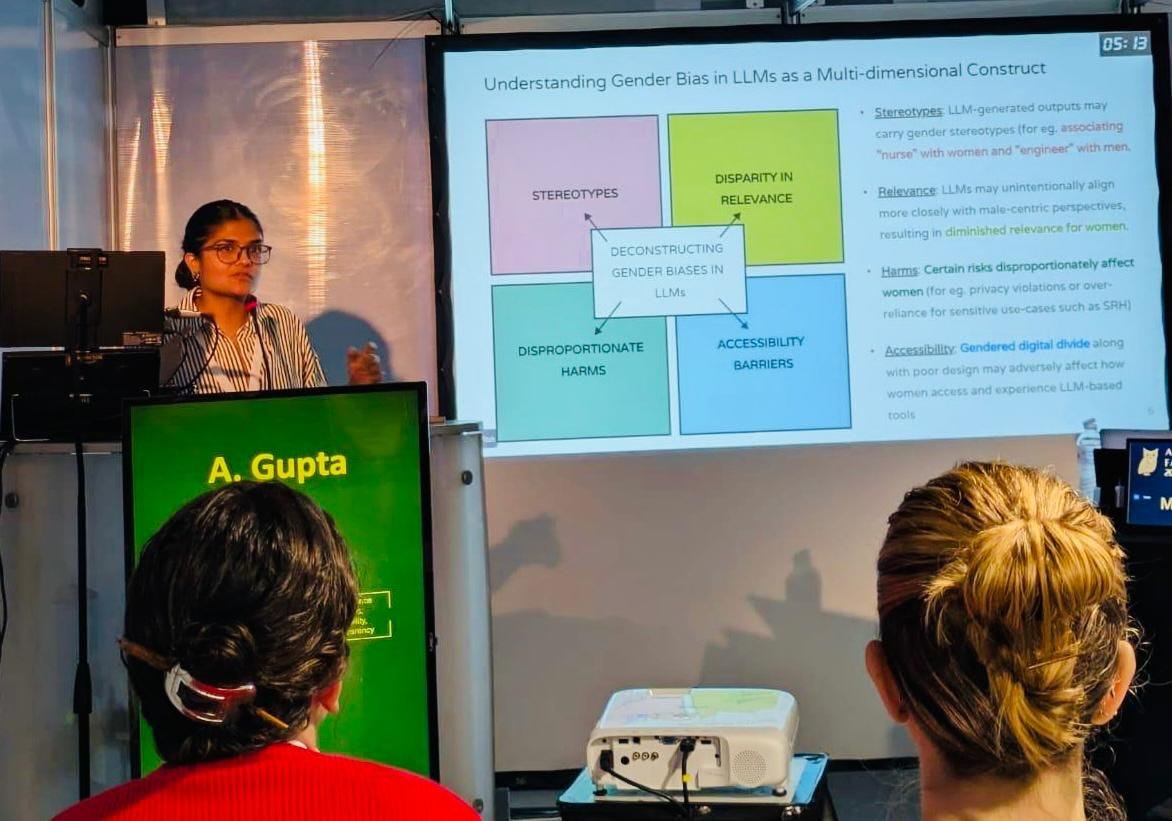

At the recent ACM FAccT Conference in Athens, Greece, Aarushi presented a paper, based on our previous work, examining gender biases in LLMs deployed in low-resource contexts in India. Read the full draft of the paper now!

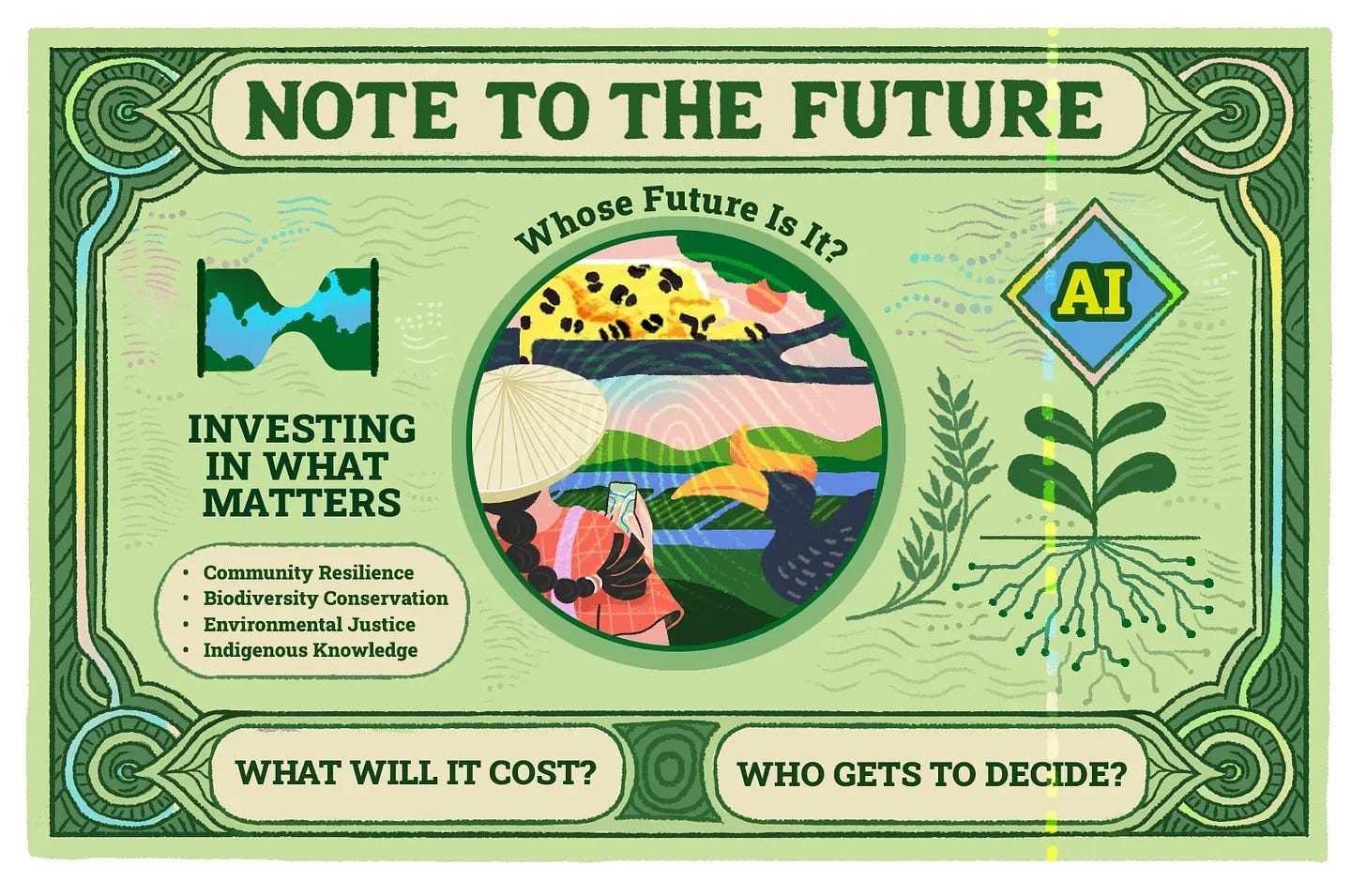

In the latest (and final!) issue of the Code Green newsletter, we look at innovative funding mechanisms, the limits of climate-tech entrepreneurship and pathways towards technological ‘post-growth’ for just and sustainable climate futures. As the intersection of AI and climate action gets more complex than ever, we ask: what solutions aren’t working, and how do we resolve the tensions between AI’s promised climate breakthroughs and its environmental costs?

You can supplement the newsletter by listening to the latest Code Green podcast episode on “Funding & Framing Asia’s Climate Futures”. Guests Hanyuan ‘Karen’ Wang and Luis Felipe R. Murillo weigh in on the importance of data stewardship and governance, cross-sector partnerships, and localised data and tools for navigating the climate compliance landscape.

Note to Code Green readers and listeners!

This is the final instalment in the series! We've loved curating these monthly dispatches for you. As we plan the next chapter and look for ways to keep the Code Green community thriving, we’d love your input: What articles or topics resonated with you? What would you like to see more of? Drop us a note at codegreen@digitalfutureslab.in — your ideas might just inspire what’s next!

events 🎤

Research Associate and IIC Fellow Aayushi Vishnoi recently participated in an immersive visit and guided tour of the Sansad Bhavan and the New Parliament House as part of her fellowship with the International Innovation Corps. The visit also included an interactive session with Mr Ravindra Garimella, Secretary to the Leader of Opposition, who shared valuable insights on parliamentary functioning and legislative processes.

Aarushi was invited to speak on gender biases in AI at Takshashila Institution’s Academic Conference held on June 1, 2025. She spoke to students of the Institution’s Graduate Certificate in Public Policy course, detailing the social biases embedded across the lifecycle of large language models. She was also a panellist at an AI and Public Health Session hosted by the George Institute for Global Health, where she joined healthcare experts and data scientists in a conversation on the opportunities and challenges of using AI for healthcare delivery in low-resourced settings in India.

ICYMI!

In November 2024, we closed the inaugural cohort of DFL’s Responsible AI Fellowship with the RAI Symposium at the Museum of Art and Photography (MAP), Bangalore. The following trailer captures the energy, ideas, and momentum of the day, which, in retrospect, was a full day of thinking together. As we look towards the next steps, you can use this form to express your interest in the 2025 cohort!

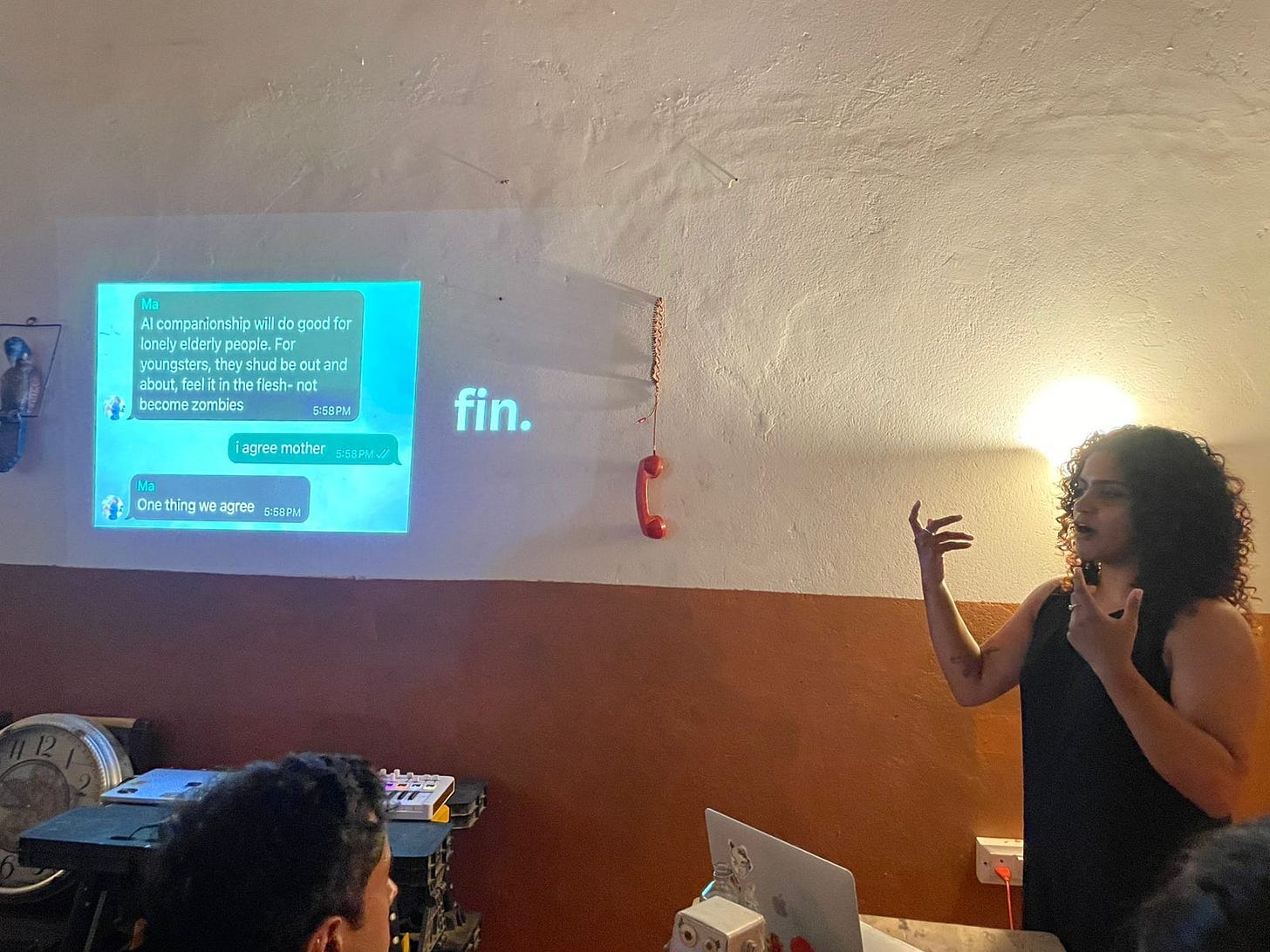

Research Associate Sasha John was invited to speak about AI-mediated intimacy at the inaugural session of The Museum of Imagined Future’s ‘Last Call for The Future’ series in Goa, an event series where technologists, thinkers, and artists gather at a bar for short talks on tech, culture and the future. Sasha spoke about how the current state of companion AI bots and the public’s reliance on ChatGPT for therapy and emotional support indicate a potentially troubling trajectory for the future of relationship-building and general emotional well-being.

Urvashi, Aarushi, and Research Associate Anushka Jain hosted a one-day workshop with invited experts in Delhi as part of our ongoing project on developing Policy Options for Open Source AI for India, in partnership with GIZ and NASSCOM. At the workshop, Aarushi presented an overview of the team’s initial draft of the policy brief and invited participants to share their insights. The team also sought specific developer-level and ecosystem-level recommendations from the experts.

media ✍🏽

Sr. Research Associate Dona Mathew was quoted in a Medianama article on how OpenAI’s ‘Sign in With ChatGPT’ could expose user data across apps. She mentions users who reported the system picking up personal information like location, even when it wasn’t explicitly shared in conversations, highlighting “the cumulative impact of ChatGPT’s ubiquity on user privacy.”

Code Green’s podcast episode on ‘Funding and Framing Asia’s Climate Futures’ was featured in climateaction.tech’s newsletter issue 272.

The Human in the Loop series was mentioned in a Mid-day article as a noteworthy project to watch out for at the most recent Eye Myth Media Arts Festival in Mumbai.

partnerships 🤝🏽

DFL is part of the Global CSO and Academic Network on AI Ethics and Policy, launched on June 26th at the 3rd Global Forum on AI Ethics in Thailand. A press briefing on the Forum, which also mentions the Network’s launch, can be accessed here.

Urvashi is serving as a Juror for the 2nd Just AI Awards – Asia Pacific Region! As a Juror, her critical insights will help spotlight innovations that are ethical, inclusive, and rooted in justice-first values.

what we’re reading 📖

Harleen

📕 A.I. Is Homogenising Our Thoughts by The New Yorker

Anushka

📕 Collective Bargaining and Equity in Bollywood: Notes from SAG-AFTRA

Shivranjana

📕 Pro-AI Subreddit Bans 'Uptick' of Users Who Suffer from AI Delusions by 404media

Spotlight: Open Source AI – Between Promise, Power, and Public Interest 🔦

Open source has long been celebrated for enabling collaboration, transparency, and decentralised innovation. In this age of rapidly expanding AI, these ideals are being revisited and contested. As governments, researchers, and builders seek to develop alternatives to Big Tech-dominated ecosystems, “open source AI” has emerged as a pivotal strategy. But, as the team at Digital Futures Lab shares, what counts as ‘open’ in AI is far from settled.

From licensing grey zones to questions of community ownership, Open Source AI (OSAI) reveals critical tensions: between access and equity, openness and extraction, public value and private interest.

Below, we outline some key questions and themes emerging from our work exploring OSAI in India:

1. Is Open Source AI a technical category or a composite claim?

Unlike open source software, AI systems are made up of multiple components: source code, model weights, training data, evaluation metrics, fine-tuning instructions. Rarely are all of these open. One model may release weights, another methodology, a third data.

What then counts as “open”? Would it be transparency, replicability, affordability? Further, if openness is a composite, how do we guard against open-washing, where the ambiguity created by partial releases turns openness into a branding exercise rather than a meaningful practice?

2. The value proposition: transparency, innovation, and reduced duplication.

Open ecosystems reduce duplication. When datasets, APIs, or model architectures are available to all, they lower barriers to entry for smaller actors, civic tech groups, and public sector developers, and enable innovation, cost savings, and greater scrutiny.

However, in multilingual and culturally diverse contexts like India, does openness lead to more diverse innovation in practice? In a context where building homegrown, language-aware models requires local data, not one-size-fits-all systems, can open data unlock localised solutions or does it risk being co-opted by better-resourced actors?

3. Voice tech in India shows the stakes of open data, as well as its vulnerabilities.

Our ongoing work on speech datasets in Indian languages highlights what openness looks like on the ground. Through this project, we are looking at how responsible, ethical datasets for low-resource Indian languages are built and sustained to support downstream voice tech applications.

As part of this work, we are tackling some critical questions that surround voice data equity: What does consent look like in low-resource settings? How can we ensure responsible reuse — and avoid communities losing control over the data they help build?

4. How do we think about risk?

Open models may be at risk of being misused and repurposed to bypass safety features and generate harmful content. But is the answer restriction or regulation?

The risks underscore the need for clear, enforceable governance frameworks that build trust and enable accountability. What kinds of governance frameworks can enable openness without losing accountability?

5. What sustains open ecosystems?

Openness has real costs: hosting, maintaining, documenting, translating, governing. It remains to be seen what kinds of financing models can ensure the sustainability and independence of emerging open-source AI communities.

For deeper insights, keep an eye out for the publications from these projects looking at Open Source AI:

Open Source AI: Policy Options for India: Making AI systems open-source could democratise the AI ecosystem. But, open-source AI could contribute to newer forms of data extractivism. Through this project, we explore some of these tensions & develop recommendations for Indian policymakers & AI developers.

Voice Technologies for Indian Languages: Best Practices & Recommendations for Responsible & Open AI: In partnership with ARTPARK and Trilegal, and with support from Bhashini and GIZ, we are working to identify barriers and enablers for open-source voice technologies in India and develop best practices and recommendations for their responsible development and use.

Have an idea for creative collaboration or a research partnership? Reach out to Shivranjana at shivranjana@digitalfutureslab.in or write to hello@digitalfutureslab.in